By Saurabh Yadav

Navigating the AI Frontier: The Critical Need for Standardized Agent Protocols

The world of Artificial Intelligence is evolving at an astonishing pace. As AI capabilities expand, we’re moving beyond isolated intelligent systems to a future where AI agents interact, collaborate, and make decisions in increasingly complex environments. This rise of autonomous and semi-autonomous AI agents brings immense promise, but it also highlights a critical challenge: how do these agents communicate effectively and reliably?

The answer lies in the establishment of robust, standardized communication methods. Without them, the full potential of interconnected AI systems remains untapped, leading to fragmentation, inefficiencies, and potential errors.

What is AI Agent Communication?

At its core, AI agent communication refers to how artificial intelligence agents interact with each other, with humans, and with external systems. This interaction isn’t just about exchanging simple messages; it encompasses a broad spectrum of activities, including:

- Exchanging Information: Sharing data, observations, and insights relevant to their tasks.

- Making Decisions: Collaborating on choices, reaching consensus, or delegating responsibilities.

- Completing Tasks: Working together to achieve shared goals, often by breaking down complex problems into manageable sub-tasks.

The Imperative of Protocols

Think of communication protocols as the rules of engagement in the digital realm. Just as human societies rely on common languages and social norms for effective interaction, AI agents require clearly defined protocols to ensure seamless operation. These protocols are essential for:

- Seamless Interaction: Enabling agents to understand each other’s messages, intentions, and capabilities without ambiguity.

- Efficient Tool Integration: Allowing agents to leverage external tools and services, extending their functionalities and reach.

- Reliable Data Sourcing: Facilitating the secure and accurate exchange of data from various sources, ensuring agents have the information they need to perform their functions.

Introducing A2A and MCP: Emerging Solutions

The good news is that the industry is actively addressing this vital need. Among the various initiatives, A2A (Agent-to-Agent) and MCP (Model Context Protocol) are two emerging protocols that show significant promise in standardizing AI agent communication. These protocols aim to provide the foundational framework for building truly interoperable and collaborative AI ecosystems.

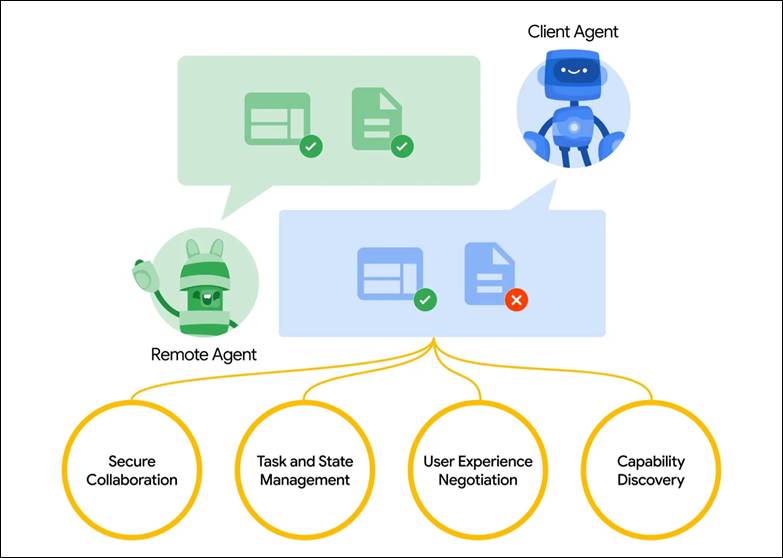

An example image showing how A2A works as an add-on to an MCP server

The diagram above illustrates a simplified example of how A2A can function as an add-on to an MCP server, facilitating communication between different agents and their associated tools. This hints at the modular and extensible nature required for future AI interactions.

Deeper Dive: Understanding A2A (Agent-to-Agent) Protocol

In our journey to understand standardized AI communication, A2A, or Agent-to-Agent, stands out as a foundational protocol designed to enable seamless interaction directly between AI entities. Launched in April 2025 by Google and a consortium of partners, A2A is engineered to foster direct communication and collaboration among a diverse ecosystem of AI agents.

What is A2A?

A2A is an open protocol specifically built for the direct exchange of information and collaborative efforts between AI agents. Its core focus is to eliminate communication silos and allow intelligent systems to work in concert. Key aspects of A2A include:

- Direct Communication and Collaboration: It prioritizes the ability for agents to talk directly to each other, facilitating real-time coordination and task execution.

- Capability Discovery: A crucial feature is the ability for agents to discover each other’s capabilities. This means an agent can query another to understand what tasks it can perform, what data it can provide, or what services it offers. This self-discovery mechanism is vital for dynamic collaboration.

- Information Exchange: Beyond simple messages, A2A supports a rich exchange of information, enabling agents to share complex data structures and contextual details.

- Multi-Modal Support: Recognizing the diverse nature of AI interactions, A2A supports various communication modalities, including text, audio, and video, making it adaptable to different application scenarios.

- Key Features for Richer Interactions:

  - Agent Cards: These are essentially digital “business cards” for AI agents, describing their capabilities, functionalities, and interaction protocols. They are fundamental for capability discovery.

  - Task Management: A2A incorporates mechanisms for agents to coordinate and manage tasks collaboratively, from delegation to progress reporting.

  - Content Negotiation: This allows agents to agree upon the format and nature of the content they exchange, ensuring compatibility and efficient processing.
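To make capability discovery more concrete, here is a minimal Python sketch of how a client agent might filter a set of Agent Cards by skill and accepted input mode. The field names (`skills`, `input_modes`), the agent names, and the URLs are illustrative assumptions and do not reproduce the official A2A Agent Card schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Skill:
    """One capability an agent advertises (illustrative fields, not the official schema)."""
    id: str
    description: str
    input_modes: list[str] = field(default_factory=lambda: ["text"])


@dataclass
class AgentCard:
    """A simplified 'business card' for an agent."""
    name: str
    url: str  # hypothetical endpoint
    skills: list[Skill]


def find_agents(cards: list[AgentCard], skill_id: str, mode: str = "text") -> list[AgentCard]:
    """Return the cards that advertise `skill_id` and accept the given input mode."""
    return [
        card
        for card in cards
        if any(s.id == skill_id and mode in s.input_modes for s in card.skills)
    ]


# Hypothetical cards that a client agent has already fetched.
CARDS = [
    AgentCard("translator", "https://agents.example.com/translator",
              [Skill("translate", "Translate text between languages")]),
    AgentCard("transcriber", "https://agents.example.com/transcriber",
              [Skill("transcribe", "Turn speech into text", ["audio"])]),
]

matches = find_agents(CARDS, "transcribe", mode="audio")
print([card.name for card in matches])
```

The mode check is a very rough stand-in for content negotiation: the client only selects agents that accept the format it intends to send.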

How A2A Works: The Mechanics of Agent Interaction

Figure: The relationship between agent, client, and core A2A capabilities

The “A2A 101” diagram provides a clear visual of the client-server relationship within A2A. Here’s a breakdown of its operational mechanics:

- Client-Remote Agent Relationship:

  - Client Agent/A2A client: This is an application or agent that consumes A2A services. Think of it as your “main” agent: the one that initiates tasks, orchestrates workflows, and communicates with other agents to achieve its objectives.

  - Remote Agent/A2A server: This is an agent exposing an HTTP endpoint that adheres to the A2A protocol. These are typically supplementary agents that handle the completion of specific tasks or provide specialized services requested by the client agent.

- Initiation and Communication:

  - A client agent initiates a task by communicating with a remote agent.

  - The primary communication mechanism is JSON-RPC over HTTP(S). This provides a lightweight and efficient way for agents to invoke methods and exchange data securely over standard web protocols.

  - For ongoing information flow, Server-Sent Events (SSE) are used to stream updates. This is particularly useful when a client agent needs to receive continuous feedback or notifications from a remote agent without constant polling.

- Rich, Multi-Part Messages: Agents are not limited to simple text exchanges. A2A supports the exchange of rich, multi-part messages, allowing for the transmission of complex data structures, files, and diverse content types, which is essential for sophisticated AI collaborations.
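The mechanics above can be sketched in a few lines of Python using only the standard library. The JSON-RPC 2.0 envelope and the SSE line format follow their respective specifications, but the method name `message/send`, the part layout (`kind`, `text`, `data`), and the status payloads are assumptions made for illustration and should be checked against the A2A specification.

```python
import json
import uuid


def build_request(method: str, params: dict) -> dict:
    """Wrap a method call in a JSON-RPC 2.0 request envelope."""
    return {"jsonrpc": "2.0", "id": str(uuid.uuid4()), "method": method, "params": params}


def parse_sse(lines):
    """Yield (event, data) pairs from an iterable of Server-Sent Events text lines."""
    event, data = "message", []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":  # a blank line ends the current event
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith(":"):  # comment line, often used as a keep-alive
            continue
        elif line.startswith("event:"):
            event = line[len("event:"):].strip()
        elif line.startswith("data:"):
            value = line[len("data:"):]
            data.append(value[1:] if value.startswith(" ") else value)


# A multi-part message: one text part and one structured-data part.
request = build_request(
    "message/send",  # assumed method name; confirm against the A2A specification
    {
        "message": {
            "role": "user",
            "parts": [
                {"kind": "text", "text": "Summarize the attached sales figures."},
                {"kind": "data", "data": {"q1": 1200, "q2": 1350}},
            ],
        }
    },
)
body = json.dumps(request)  # the string a client would POST to the remote agent

# Hypothetical stream a remote agent might send back while it works.
SAMPLE_STREAM = [
    ": keep-alive",
    'data: {"status": "working"}',
    "",
    'data: {"status": "completed"}',
    "",
]
statuses = [json.loads(data)["status"] for _, data in parse_sse(SAMPLE_STREAM)]
print(statuses)
```

In a real client the lines would come from an open HTTP response rather than a list, but the parsing logic stays the same, which is why the client never has to poll.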

In essence, A2A provides a structured yet flexible framework for AI agents to discover, interact, and collaborate directly, paving the way for more integrated and intelligent AI ecosystems.

Expanding the Horizon: Introducing MCP (Model Context Protocol)

While A2A focuses on direct agent-to-agent communication, the other vital piece of the puzzle for a robust AI ecosystem is how AI applications interact with the broader external world. This is where MCP, or Model Context Protocol, comes into play. Introduced by Anthropic, MCP is designed specifically to standardize how AI applications connect with external tools, data sources, and systems, providing the necessary context for intelligent operations.

What is MCP?

MCP is an open standard that addresses the crucial need for AI to access and utilize external information and capabilities. Its core emphasis is on providing a standardized way for AI applications to:

- Connect with External Tools: Enabling AI to leverage a wide array of specialized tools and services, from search engines to complex analytical software.

- Access Data Sources: Facilitating seamless retrieval of information from various data repositories, whether local or cloud-based.

- Integrate with External Systems: Allowing AI to interact with and manage other software systems, extending its operational reach.

Key features of MCP include:

- Tools (Actions AI Can Take): MCP defines how AI models can discover and invoke external tools. These tools represent the actions or functionalities that an AI can perform by interacting with external systems.

- Resources (Context Provided to AI): This refers to the various data sources and information streams that provide context to the AI. MCP standardizes how AI can access and interpret this information.

- Prompts (User-Invoked Interactions): MCP also considers user interaction, particularly how user-invoked prompts can trigger AI actions that utilize external tools and resources.
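As a rough illustration of the tool side of MCP, the sketch below answers two JSON-RPC requests in-process: one that lists available tools and one that calls a tool. It does not use any MCP SDK. The `add` tool and the `handle` function are hypothetical, and the message shapes are simplified, so treat this as a sketch of the idea and not a conforming server.

```python
# Hypothetical tool registry: each tool has a description, an input schema, and a handler.
TOOLS = {
    "add": {
        "description": "Add two numbers.",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def error(request_id, code: int, message: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for the two tool methods sketched here."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(request["id"], -32602, "Unknown tool")  # JSON-RPC "Invalid params"
        value = tool["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        return error(request["id"], -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "add", "arguments": {"a": 2, "b": 3}},
})
print(listing["result"]["tools"][0]["name"], call["result"]["content"][0]["text"])
```

The listing step is what lets a model discover tools at runtime: it sees names, descriptions, and input schemas, then decides which tool to invoke and with which arguments.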

How MCP Works: Bridging AI and the External World

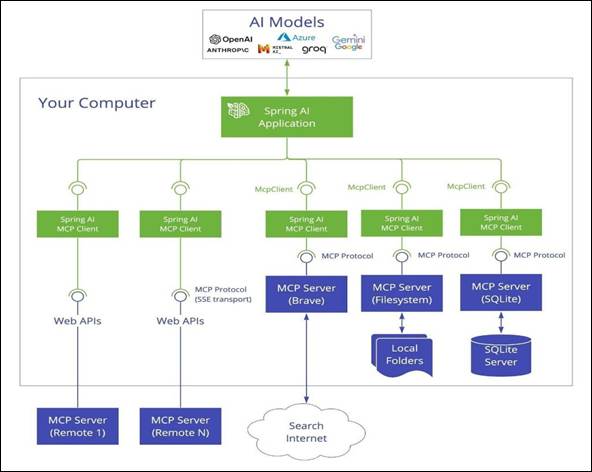

Figure: How an AI application running on “Your Computer” can interact with various AI models (OpenAI, Anthropic, Azure, Gemini, Groq) and a diverse set of external resources using the Model Context Protocol (MCP)

The diagram above provides an overview of MCP’s architecture. At its heart, MCP enables an AI to connect with diverse data sources via an MCP server, which acts as a crucial facilitator between the AI model and various storage and external systems.

MCP operates through several key components:

- MCP Hosts: These are applications that request information or services from an MCP server. Examples include AI assistants like Claude or even advanced iterations of ChatGPT, which need to pull in real-world data or execute specific actions.

- MCP Clients: These are components inside the host that manage the communication between the MCP host (the AI application) and an MCP server, with each client maintaining a connection to a single server. They handle the specifics of the data exchange and command execution.

- MCP Servers: These are the backbone of the MCP ecosystem. MCP servers are programs responsible for exposing functionalities that allow AI applications to access files, query databases, and interact with various APIs. As shown in the diagram, an MCP server can be configured for different transport mechanisms (like SSE transport for Brave) or to interact with different storage types (Filesystem, SQLite).

- Data Sources: These are the ultimate endpoints from which real-time information is retrieved. They can be local files, cloud-based databases, web APIs, or even general internet searches. MCP provides a structured way for AI to tap into these diverse sources.
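The division of labor between these components can be sketched as plain Python classes. Everything here is a toy stand-in: `ToyHost`, `ToyClient`, `ToyServer`, and the resource URIs are hypothetical names chosen for illustration, and the calls happen in-process instead of over a real transport.

```python
class ToyServer:
    """Stands in for an MCP server that fronts one data source."""

    def __init__(self, name: str, resources: dict):
        self.name = name
        self._resources = resources  # maps a resource URI to its content

    def list_resources(self) -> list:
        return sorted(self._resources)

    def read_resource(self, uri: str) -> str:
        return self._resources[uri]


class ToyClient:
    """Manages the connection between the host and exactly one server."""

    def __init__(self, server: ToyServer):
        self._server = server

    def list_resources(self) -> list:
        return self._server.list_resources()

    def read(self, uri: str) -> str:
        return self._server.read_resource(uri)


class ToyHost:
    """The AI application: owns one client per server and routes requests."""

    def __init__(self):
        self._clients = {}

    def connect(self, server: ToyServer) -> None:
        self._clients[server.name] = ToyClient(server)

    def catalog(self) -> dict:
        """Everything the host could offer the model as context."""
        return {name: client.list_resources() for name, client in self._clients.items()}

    def read(self, server_name: str, uri: str) -> str:
        return self._clients[server_name].read(uri)


host = ToyHost()
host.connect(ToyServer("filesystem", {"file:///notes/todo.txt": "Ship the demo"}))
host.connect(ToyServer("sqlite", {"db://sales/q1": "1200"}))

print(host.catalog())
print(host.read("filesystem", "file:///notes/todo.txt"))
```

Keeping one client per server means a misbehaving server can be disconnected without touching the host's other connections.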

In essence, MCP acts as a sophisticated translator and router, allowing AI models to transcend their internal knowledge base and dynamically interact with the vast amount of information and functionalities available in the external world. This capability is paramount for AI applications that need to be contextually aware, perform real-world actions, and provide up-to-date responses.

Together, A2A and MCP form a powerful duo, addressing both the internal communication needs between AI agents and the external connectivity requirements for AI applications.

A2A vs. MCP: Understanding Their Complementary Roles

While both A2A and MCP are crucial for the advancement of AI agent protocols, they serve distinct yet complementary purposes. Understanding their individual strengths and design philosophies is key to appreciating how they collectively build a more robust and capable AI ecosystem. This comparison clarifies when and where each protocol shines.

| Category | A2A (Agent-to-Agent) | MCP (Model Context Protocol) |
| --- | --- | --- |
| Primary Goal | Enable inter-agent task exchange | Enable LLMs to access external tools or context |
| Designed For | Communication between autonomous agents | Enhancing single-agent capabilities during inference |
| Focus | Multi-agent workflows, coordination, delegation | Dynamic tool usage, context augmentation |
| Execution Model | Agents send/receive tasks and artifacts | LLM selects and executes tools inline during reasoning |
| Security | OAuth 2.0, API keys, declarative scopes | Handled at application integration layer |
| Developer Role | Build agents that expose tasks and artifacts via endpoints | Define structured tools and context the model can use |
| Ecosystem Partners | Google, Salesforce, SAP, LangChain, etc. | Anthropic, with emerging adoption in tool-based LLM UIs |

In essence, A2A is about orchestration between distinct AI entities, enabling them to form complex collaborative networks. MCP, on the other hand, is about empowering a single AI application with external capabilities, giving it the “eyes, ears, and hands” to interact with the real world. Together, they represent a powerful leap towards truly intelligent and interconnected AI systems.
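To see the two roles side by side, consider this small Python sketch, in which one agent delegates a task to another (the A2A-style step) and the second agent completes it by invoking a tool (the MCP-style step). The classes, the `lookup_weather` tool, and the task fields are all hypothetical, and no real protocol traffic is involved.

```python
def lookup_weather(city: str) -> str:
    """Hypothetical tool; in practice it would sit behind an MCP server."""
    return {"Paris": "18C, cloudy"}.get(city, "unknown")


class WeatherAgent:
    """Specialist agent: receives tasks from peers and uses a tool to finish them."""

    tools = {"lookup_weather": lookup_weather}

    def handle_task(self, task: dict) -> dict:
        # MCP-style step: a single agent extends itself by invoking an external tool.
        report = self.tools["lookup_weather"](task["city"])
        return {"task_id": task["task_id"], "status": "completed", "artifact": report}


class TripPlanner:
    """Orchestrating agent: delegates sub-tasks to other agents."""

    def __init__(self, weather_agent: WeatherAgent):
        self.weather_agent = weather_agent

    def plan(self, city: str) -> str:
        # A2A-style step: one agent hands a task to another and gets an artifact back.
        result = self.weather_agent.handle_task({"task_id": "t-1", "city": city})
        return f"Trip to {city}: forecast {result['artifact']}"


planner = TripPlanner(WeatherAgent())
print(planner.plan("Paris"))
```

The planner never learns which tool the weather agent used; it only sees the task result, which is the separation of concerns the table describes.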

The Path Forward: Benefits and Challenges of A2A & MCP

Let’s explore the key benefits and the important concerns associated with these transformative protocols.

Benefits and Advantages of A2A and MCP

The widespread adoption of open protocols like A2A and MCP offers several significant benefits for the AI ecosystem, pushing us towards a more integrated and intelligent future:

- Enhanced Interoperability: This is perhaps the most significant advantage. Open protocols enable seamless communication and collaboration between AI agents and applications built by different developers and organizations. Just as the internet relies on open standards for global connectivity, AI systems can now truly talk to each other, breaking down proprietary silos.

- Increased Flexibility and Decentralization: A2A, in particular, fosters a more decentralized AI ecosystem. Specialized agents can be developed and deployed independently, yet still contribute to a larger collaborative network. This reduces reliance on monolithic AI applications and promotes a more agile development environment.

- Dynamic Capability Discovery and Utilization (A2A): A key strength of A2A is the ability for agents to dynamically discover and utilize the capabilities of other agents at runtime. This “plug-and-play” functionality leads to more flexible and adaptive problem-solving, as agents can find and engage the best-suited collaborators for any given task.

- Standardized Communication and Tool Integration: Both A2A and MCP provide standardized frameworks for communication and tool access. This significantly reduces the need for custom integrations, which can be time-consuming and prone to errors. The result is greater efficiency and innovation across the entire AI landscape.

- Fostering Specialization and Efficiency: A2A encourages the development of highly specialized agents through collaboration. Each agent can focus on its niche expertise. Similarly, MCP allows agents to dynamically leverage the most suitable external tools for specific tasks, enhancing overall efficiency by extending the AI’s capabilities on demand.

- Building Blocks for Autonomous Systems: The combined use of A2A and MCP lays the foundation for truly autonomous software systems where AI agents can not only discover resources (other agents and tools) but also coordinate their actions, and collectively solve complex problems with minimal human intervention. This moves us closer to self-organizing and self-optimizing AI environments.

Challenges and Concerns

Despite their immense potential, the adoption and scaling of A2A and MCP also present several challenges that developers and organizations must address:

- Increased Testing Complexity: Managing and testing the interactions between multiple autonomous agents and diverse tools in a distributed system significantly increases complexity compared to monolithic applications. Reproducing edge cases and debugging failures in such an environment can become particularly difficult.

- Heightened Security Risks: The larger number of interacting components and the involvement of third-party services widen the attack surface. Ensuring secure authentication, authorization, and robust data handling across multiple agents and tools becomes critically important to prevent vulnerabilities and data breaches.

- State Management Challenges: Maintaining consistent state and context across multiple autonomous agents, especially when they operate with their own internal contexts, poses a significant challenge. Handling partial failures gracefully in distributed workflows and ensuring data integrity requires careful consideration and sophisticated error handling mechanisms.

- Reasoning and Computational Overhead: The process of agents discovering each other, negotiating collaboration, and reasoning about how to utilize tools and other agents can introduce significant computational overhead. Optimizing these interactions for efficiency is crucial, particularly in real-time or resource-constrained environments.

- Hidden Complexity and Debugging Difficulties: Relying on protocols and external services can create “black boxes” in the system. When an issue arises, it can be harder to understand the root cause of errors and debug issues, as the problem might reside within an external tool, another agent, or the communication protocol itself. Effective logging, monitoring, and tracing across the entire distributed system are essential for diagnosing and resolving problems.
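One common way to soften the debugging and partial-failure problems listed above is to tag every step of a workflow with a shared trace ID and to log each attempt. The Python sketch below shows that idea with the standard `logging` module. The helper `call_with_trace` and the flaky callee are hypothetical, and neither A2A nor MCP prescribes this approach.

```python
import logging
import uuid

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("agent-trace")


def call_with_trace(step, func, payload, trace_id=None, max_attempts=3):
    """Run one step of a workflow, logging every attempt under a shared trace ID."""
    trace_id = trace_id or uuid.uuid4().hex
    for attempt in range(1, max_attempts + 1):
        try:
            result = func(payload)
        except Exception as exc:  # broad on purpose: the callee is a black box here
            log.warning("trace=%s step=%s attempt=%d failed: %s", trace_id, step, attempt, exc)
        else:
            log.info("trace=%s step=%s attempt=%d ok", trace_id, step, attempt)
            return trace_id, result
    raise RuntimeError(f"step {step!r} failed after {max_attempts} attempts (trace={trace_id})")


# Hypothetical flaky remote call: fails once, then succeeds.
attempts = {"count": 0}


def flaky_summarize(text: str) -> str:
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise TimeoutError("remote agent did not answer")
    return text.upper()


trace_id, summary = call_with_trace("summarize", flaky_summarize, "quarterly report")
# Reuse the same trace ID for the next step so the log lines can be correlated.
_, length = call_with_trace("measure", len, summary, trace_id=trace_id)
print(summary, length)
```

Searching the logs for a single trace ID then reconstructs the full path of a request across agents and tools, including the attempts that failed.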

Addressing these challenges will be crucial for the successful and widespread adoption of A2A and MCP. However, the benefits they offer in terms of interoperability, flexibility, and autonomous capabilities are too significant to ignore.