By Pooja Nirmal

Understanding customer sentiment has never been more critical—or more challenging

Every day, millions of customers share their opinions across social media, review sites, and forums. A single viral complaint can damage a brand’s reputation overnight, while positive feedback can drive sales and loyalty. Yet most companies struggle to analyze this feedback at scale. Traditional methods are either too slow, too inaccurate, or both.

Enter RoBERTa—a transformer-based model that’s changing the game for sentiment analysis. With accuracy rates exceeding 98%, it outperforms traditional approaches while remaining practical for real-world applications. If you’re looking to understand customer sentiment efficiently and accurately, RoBERTa might be exactly what you need.

What Makes RoBERTa Special?

RoBERTa (Robustly Optimized BERT Pretraining Approach), developed by Facebook AI in 2019, is an enhanced version of BERT (Bidirectional Encoder Representations from Transformers). While it shares the same core architecture as BERT, RoBERTa’s pre-training process has been significantly optimized to deliver superior performance across various NLP tasks, including sentiment analysis, text classification, question answering, and extractive summarization.

The best part? RoBERTa is freely available on the Hugging Face Transformers library, making it accessible to developers and data scientists worldwide.

Six Key Improvements Over BERT

RoBERTa isn’t just a minor tweak—it represents a fundamental rethinking of how to train transformer models effectively. Here are the crucial enhancements:

1. Massively Expanded Training Dataset

RoBERTa was trained on approximately 160GB of text data, drawn from CommonCrawl, Wikipedia, BookCorpus, and other sources. That’s 10 times larger than BERT’s 16GB training corpus, allowing the model to capture far more nuanced language patterns.

2. Larger Batch Sizes

By training with significantly bigger batches, RoBERTa achieved more stable and effective gradient updates, leading to better convergence and performance.

3. Extended Training Duration

RoBERTa was trained for substantially more steps than BERT, giving it time to learn deeper and more sophisticated language representations.

4. Dynamic Masking Strategy

Unlike BERT’s fixed masked tokens, RoBERTa applies masking randomly at each training epoch. This means the model sees more variations of the same text, improving its ability to generalize.

5. Consistent Longer Input Sequences

RoBERTa uses sequences up to 512 tokens consistently throughout training, allowing it to handle longer contexts more effectively.

6. Removal of Next Sentence Prediction (NSP)

Perhaps the most interesting change: RoBERTa eliminated the Next Sentence Prediction task that BERT used. While NSP was intended to help with tasks like question answering and natural language inference, experiments showed it didn’t improve performance and sometimes even hurt it. By removing NSP, RoBERTa could dedicate all its capacity to masked language modeling, which proved sufficient for downstream tasks.

Why Sentiment Analysis Matters More Than Ever

While RoBERTa excels at various NLP tasks, sentiment analysis has become particularly crucial in today’s business landscape. Customer feedback on platforms like Google Reviews, Amazon, Twitter, Facebook, and Reddit provides invaluable insights into brand reputation, product performance, and customer satisfaction.

With social media generating unprecedented volumes of customer feedback daily, companies need automated solutions that can process thousands of comments accurately and quickly. Manual review is no longer feasible at scale, and traditional sentiment analysis tools often miss the nuances of human expression—sarcasm, context, and subtle emotions.

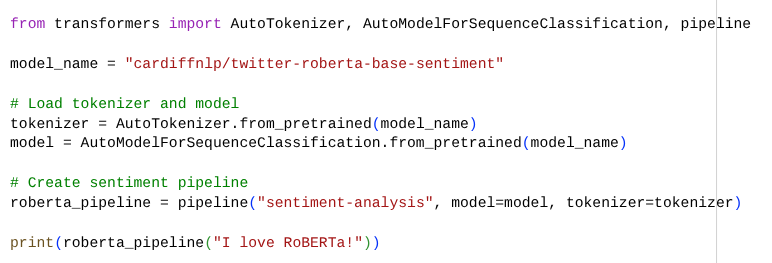

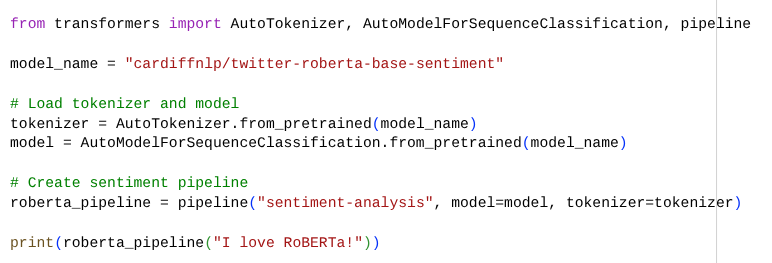

Getting Started with RoBERTa: A Simple Implementation

Here’s a simple example of how you can load and use the RoBERTa sentiment analysis model from Hugging Face:

To determine the most effective approach for sentiment analysis, we conducted a comprehensive comparative study pitting traditional techniques against modern transformer models. We tested:

- Traditional Methods: VADER, TextBlob, Logistic Regression

- Transformer Models: Fine-tuned BERT, RoBERTa

We used Reddit data for our evaluation because it closely resembles the authentic, unfiltered feedback found on Google Reviews, Amazon, and other platforms. The results were clear:

With more than 98% accuracy, RoBERTa significantly outperformed all other sentiment analysis techniques. Even with a modest computational setup, RoBERTa analyzed approximately 200 Reddit posts in roughly 2 minutes, demonstrating its suitability for large-scale sentiment analysis when applied to thousands of reviews or social media comments.

The Future of Sentiment Analysis

Sentiment analysis is evolving rapidly. With the rise of even larger language models like GPT-4, domain-specific versions of RoBERTa, and multilingual models, the accuracy and flexibility of these tools will only continue to improve. However, RoBERTa has found a sweet spot between accuracy and efficiency that makes it one of the most reliable and practical choices for sentiment analysis today.